vLLM Optimization Techniques: 5 Practical Methods to Improve Performance

Running large language models efficiently can be challenging. You want good performance without overloading your servers or exceeding your budget. That's where vLLM comes in - but even this powerful inference engine can be made faster and smarter.

In this post, we'll explore five cutting-edge optimization techniques that can dramatically improve your vLLM performance:

- Prefix Caching - Stop recomputing what you've already computed

- FP8 KV-Cache - Pack more memory efficiency into your cache

- CPU Offloading - Make your CPU and GPU work together

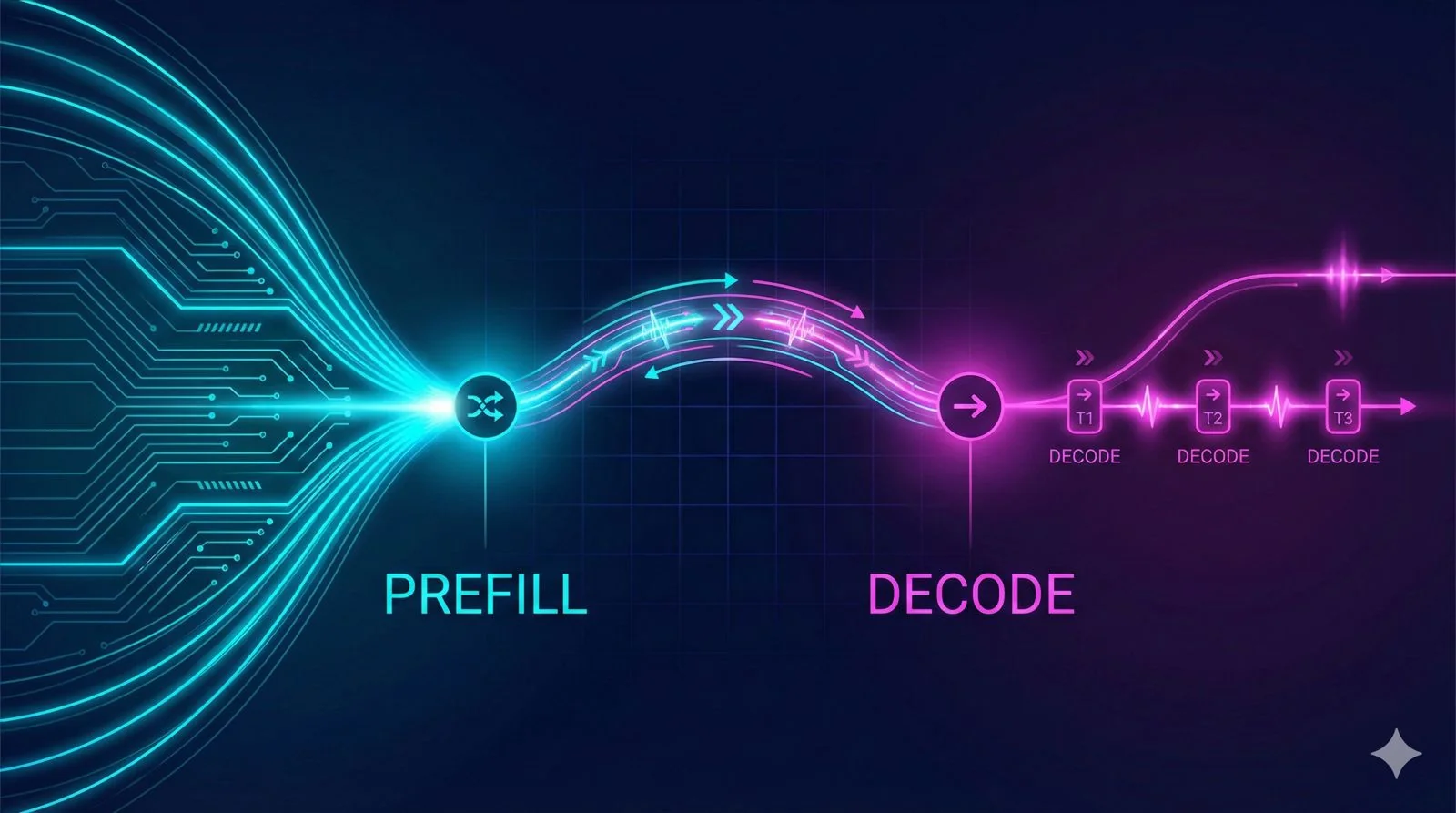

- Disaggregated P/D - Split processing and serving for better scaling

- Zero Reload Sleep Mode - Keep your models warm without wasting resources

Each technique addresses a different bottleneck, and together they can significantly improve your inference pipeline performance. Let's explore how these optimizations work.